The limits of distance: Why optimization alone isn’t enough

Reflections from a TB diagnostic network optimization

Introduction

In public health, decisions about where to place health facilities are often supported by spatial optimization models. These tools offer a way to match services with need, maximize coverage, and allocate limited resources more effectively. But although these models are grounded in data and logic, their recommendations still need to be validated against local realities.

This article explores the importance of distinguishing between model-generated recommendations and real-world relevance. Drawing on an example from a national tuberculosis (TB) diagnostic network optimization, it shows how an area that the model flagged as a high-priority location was ultimately ruled out – not because the math was wrong, but because the system on the ground was already working well.

The takeaway: optimization can guide decisions, but context must always have the final word.

The context: Using optimization to guide TB lab placement

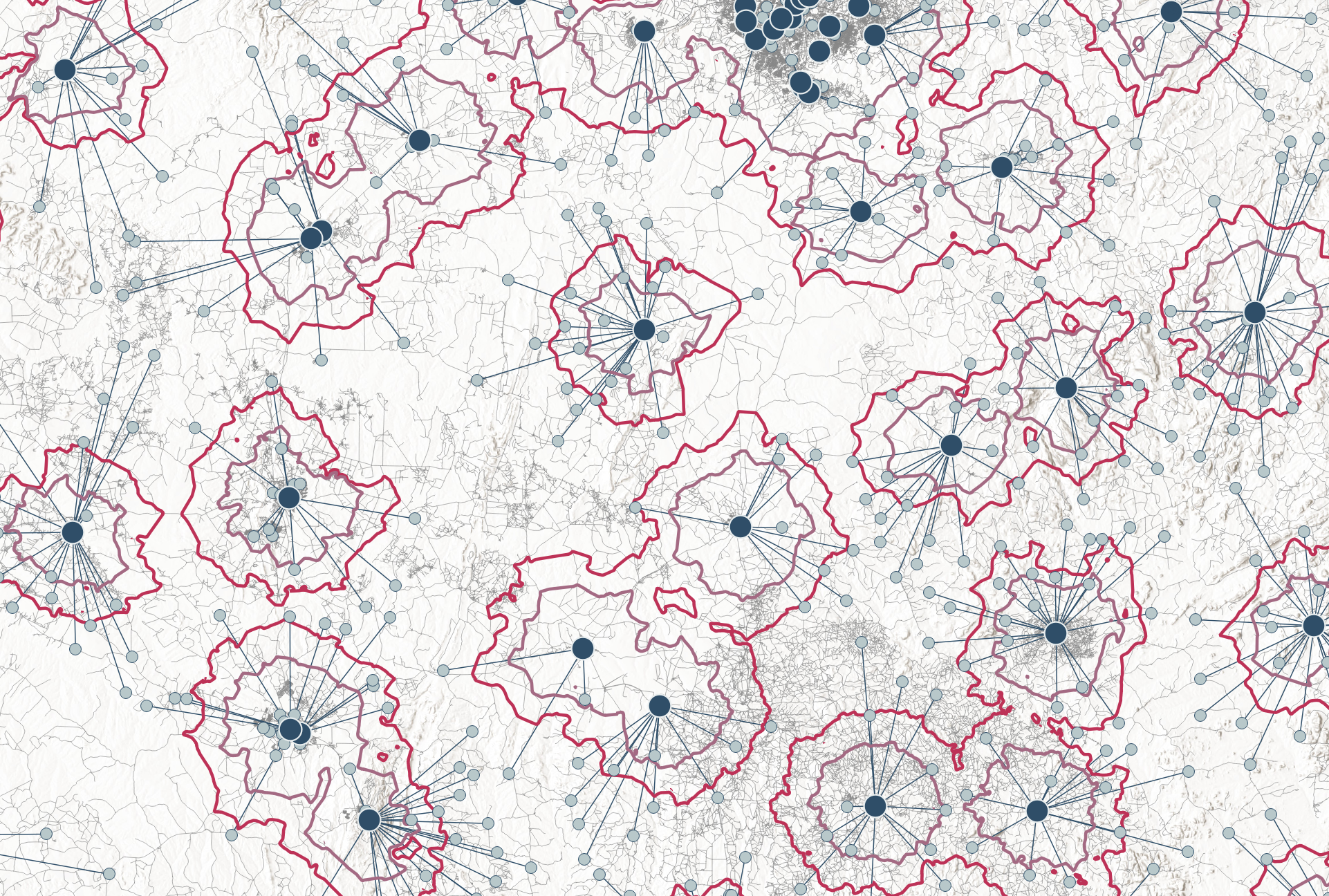

In a recent project supporting a national TB program, we set out to improve diagnostic coverage by identifying optimal locations for additional laboratories. Using facility coordinates and historical testing demand data, we ran a location-allocation model designed to minimize demand-weighted distance to the nearest lab. The objective was to reduce specimen referral distances while ensuring resources were used efficiently.

The model behaved as expected. It produced a list of potential new laboratory locations, ranked by how much each would reduce the overall demand-weighted transport distance. Among the top-ranked sites were areas with very long distances to the nearest lab – but also relatively low demand, even when accounting for new referral linkages in an expanded lab network.
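To show how a remote, low-demand area can rise to the top of such a ranking, here is a minimal sketch that scores each candidate site by how much adding it alone would reduce total demand-weighted distance. That reduction is the marginal-gain step of a greedy heuristic for the p-median problem, and it is not necessarily the solver used in the project. The facility names, coordinates, demand figures, and function names are all hypothetical. Straight-line distance stands in for road distance or travel time.

```python
import math

# Hypothetical facilities: (x, y) coordinates in km and annual TB test demand.
FACILITIES = {
    "A": ((0.0, 0.0), 400),
    "B": ((5.0, 1.0), 300),
    "C": ((200.0, 150.0), 20),  # remote, low-demand facility
    "D": ((12.0, 3.0), 250),
}
EXISTING_LABS = {"Lab1": (1.0, 1.0)}
CANDIDATE_SITES = {"cand_C": (200.0, 150.0), "cand_D": (12.0, 3.0)}


def distance(p, q):
    """Straight-line distance; an assumed stand-in for road distance or travel time."""
    return math.hypot(p[0] - q[0], p[1] - q[1])


def demand_weighted_distance(labs):
    """Sum of demand x distance, with every facility referring to its nearest lab."""
    return sum(
        demand * min(distance(xy, lab_xy) for lab_xy in labs.values())
        for xy, demand in FACILITIES.values()
    )


def rank_candidates(existing, candidates):
    """Rank candidates by how much adding each one alone reduces the objective."""
    baseline = demand_weighted_distance(existing)
    gains = {
        name: baseline - demand_weighted_distance({**existing, name: xy})
        for name, xy in candidates.items()
    }
    return sorted(gains.items(), key=lambda item: item[1], reverse=True)


for name, gain in rank_candidates(EXISTING_LABS, CANDIDATE_SITES):
    print(f"{name}: reduces demand-weighted distance by {gain:.0f} test-km")
```

With these made-up numbers, `cand_C` ranks first even though its facility has less than a tenth of the demand at `cand_D`. The long distance alone is enough to dominate the objective, and nothing in that objective measures whether the existing referral link actually works.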

The reality check: Not all distance is delay

Before acting on the results, we reviewed the findings with local technical experts. Together, we examined specimen referral performance reports, clinical service data, and qualitative information from on-site assessments, alongside multi-year trends in demand. This step proved essential.

For one high-ranking site, we immediately flagged a mismatch. Although the area was indeed very far from the nearest lab, it had a highly efficient referral mechanism. A reliable courier system ensured specimens were transported quickly, and historical clinical data confirmed that nearly all patients were tested with rapid molecular diagnostics, in line with policy. Forecast demand in the area remained consistently low, too low to justify placing a new (and costly) molecular diagnostic instrument there.

In short, the model identified an area with poor geographic access – but not poor functional access. Setting up a new lab would have led to high capital and operational expenses (including service, maintenance, and quality assurance) without any significant improvement in diagnostic outcomes or system performance. The existing solution was already working well.

Lessons learned: metrics vs. meaning

This case highlights the need to distinguish between model-driven results and real-world relevance:

Reducing distance doesn’t guarantee better outcomes: If your model’s objective is to reduce distance, keep in mind that a facility far from a lab may still be serving its patients effectively.

System performance data matters: Metrics like testing coverage, turnaround time, and referral efficiency are crucial complements to spatial analysis. Some types of contextual information – such as insights from site visits or qualitative assessments – are difficult to translate into structured data for modeling. That makes them hard to include as input in (multi-objective) optimization, but they are still essential for interpreting model results and identifying sites where new services are unlikely to improve outcomes.
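One way to bring such metrics into the interpretation step is to check each top-ranked candidate against a few performance indicators before acting on it. The sketch below assumes three hypothetical indicators (median referral turnaround, share of presumptive TB patients tested with rapid molecular diagnostics, and forecast annual tests) and illustrative thresholds. A real program would set these from national policy and instrument economics. The output is a list of reasons to review a site with local experts, not an automatic decision.

```python
from dataclasses import dataclass


@dataclass
class SitePerformance:
    name: str
    median_turnaround_days: float   # hypothetical: specimen collection to result
    molecular_test_coverage: float  # hypothetical: share tested with rapid molecular diagnostics
    forecast_annual_tests: int      # hypothetical: forecast demand for the site


# Illustrative thresholds only; not taken from any national guideline.
MAX_TURNAROUND_DAYS = 3.0
MIN_COVERAGE = 0.9
MIN_TESTS_FOR_NEW_INSTRUMENT = 1000


def review_flags(site: SitePerformance) -> list[str]:
    """Return reasons why a new lab at this site may not improve outcomes."""
    reasons = []
    if site.median_turnaround_days <= MAX_TURNAROUND_DAYS:
        reasons.append("referral turnaround already within target")
    if site.molecular_test_coverage >= MIN_COVERAGE:
        reasons.append("molecular testing coverage already high")
    if site.forecast_annual_tests < MIN_TESTS_FOR_NEW_INSTRUMENT:
        reasons.append("forecast demand below instrument threshold")
    return reasons


# Hypothetical performance data for two model-ranked candidate sites.
performance = {
    "site_X": SitePerformance("site_X", 2.0, 0.95, 120),
    "site_Y": SitePerformance("site_Y", 6.5, 0.70, 2400),
}

for name, site in performance.items():
    print(name, review_flags(site) or ["no flags: candidate for further assessment"])
```

Here `site_X` resembles the case described in this article: far away on the map, but with fast referrals, high coverage, and low demand, so all three flags fire. `site_Y` raises none and would move on to further assessment.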

Local insight is key: Optimization models follow consistent mathematical logic – but only those familiar with the local system can determine whether a modeled recommendation reflects a meaningful need or just a theoretical improvement.

Conclusion: Smarter modeling requires grounded insight

Optimization tools can be powerful aids for evidence-based planning – but they’re no substitute for context.

Adding a new lab to reduce geographic distance doesn’t automatically lead to better patient outcomes. In some cases (as in the example above) there may be no real problem to address. A long distance might look like a gap in access, but if the system is functioning well, it isn’t causing delays or missed diagnoses. In our example, a new testing site would have increased overall costs rather than reducing them. In these situations, a model focused only on minimizing distance can end up offering a neat solution to a non-issue.

The most effective public health interventions happen when quantitative models are paired with on-the-ground insight, stakeholder input, and a systems-level understanding of how services actually function.

If you’re using spatial optimization in your work, consider making a structured validation step part of your process. A model can be technically correct – but only real-world context can reveal whether it’s strategically sound.